For an enterprise CIO, there is no feeling more visceral than the “Go-Live” Sunday night. You have spent six months in committee meetings, burned $3M on consultants, and migrated terabytes of data. But the real test isn't the code—it's Monday morning at 8:00 AM, when 28,000 employees try to log in.

If they can't, you aren't just dealing with a support ticket spike. You are dealing with a résumé-generating event. Industry data confirms the stakes: large enterprises lose an average of $14,000 per minute during downtime. That is $840,000 per hour, or nearly $1 million lost before your first coffee break. Yet despite these stakes, McKinsey reports that 70% of digital transformations fail to meet their original goals.

Why is the failure rate so high? Because most IT leaders treat migration as a technical problem. They focus on scripts, APIs, and data mapping. But migration at enterprise scale, specifically with 20,000+ users, is not a technical problem. It is a governance and communication problem. The code usually works; it's the tribal knowledge, the undocumented workflows, and the user behavior that break the system.

We recently oversaw a migration for a 28,000-user global enterprise. The project had been stalled for six months by political deadlock. By shifting the focus from “technology first” to “governance first,” we unblocked the initiative and executed the cutover with zero downtime. This is the playbook we used.

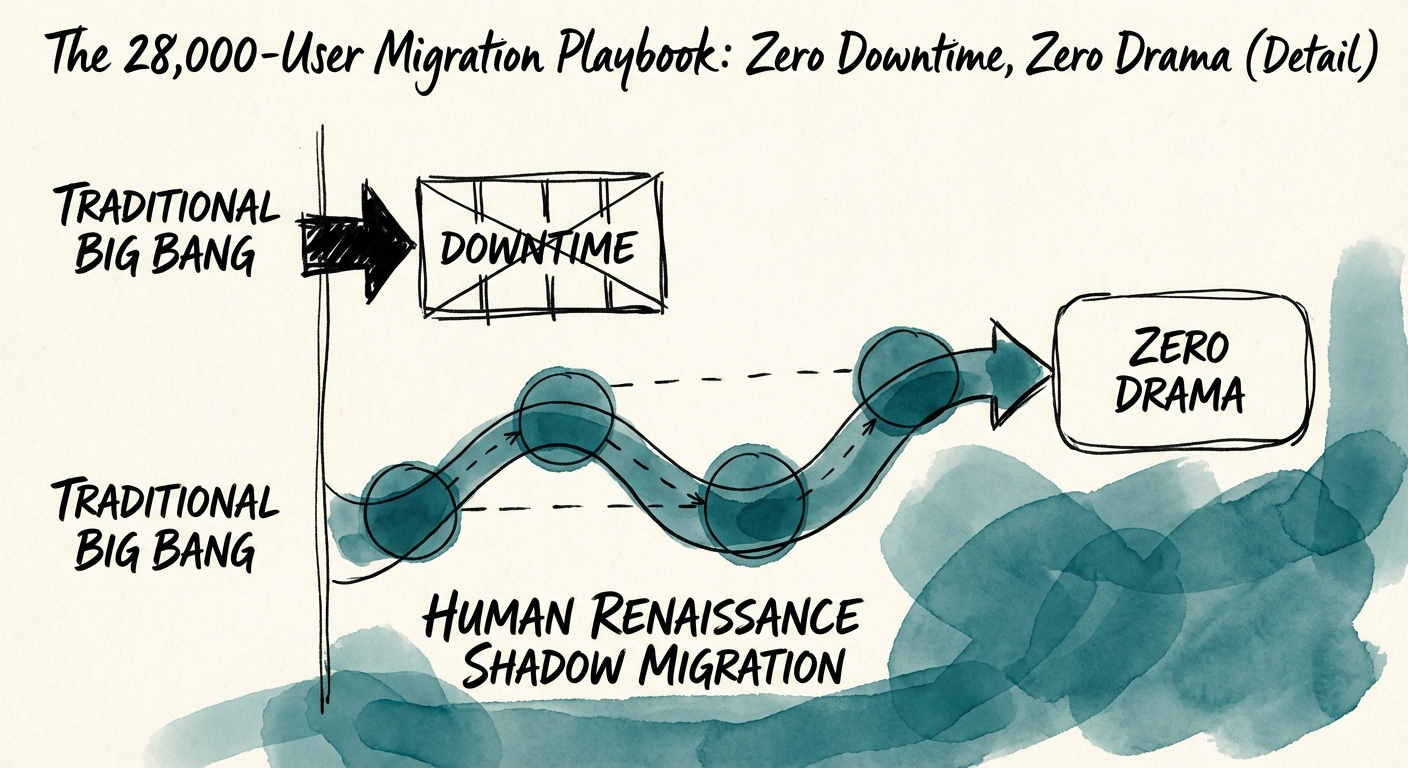

The traditional “Big Bang” migration—shutting down the old system on Friday and praying the new one works on Monday—is operational suicide. It assumes you have captured 100% of the requirements, which is statistically impossible in complex organizations.

Instead of a hard cutover, we used a “Shadow Migration” approach. We ran the new environment in parallel with the legacy system for 30 days before the official switch. This wasn't just a staging environment; it was a live data mirror. That let us validate the integrity of the consolidated tech stack against real data without disrupting users.

During this phase, we discovered that 15% of the user base relied on “gray IT” workflows—undocumented processes that would have broken immediately upon a hard cutover. By catching this in the shadow phase, we remediated the gaps before a single user filed a ticket.
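To make the parallel run concrete, here is a minimal sketch of the kind of reconciliation check a shadow phase depends on. It is not the tooling from the engagement: the `reconcile` and `record_fingerprint` helpers, the snapshot shape (`{record_id: record}`), and the sample data are all assumptions for illustration.

```python
import hashlib
import json


def record_fingerprint(record: dict) -> str:
    """Return a stable hash of a record, independent of key order."""
    canonical = json.dumps(record, sort_keys=True, default=str)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def reconcile(legacy: dict, shadow: dict) -> dict:
    """Compare two {record_id: record} snapshots and report differences."""
    legacy_ids, shadow_ids = set(legacy), set(shadow)
    mismatched = sorted(
        rid
        for rid in legacy_ids & shadow_ids
        if record_fingerprint(legacy[rid]) != record_fingerprint(shadow[rid])
    )
    return {
        "missing_in_shadow": sorted(legacy_ids - shadow_ids),
        "unexpected_in_shadow": sorted(shadow_ids - legacy_ids),
        "mismatched": mismatched,
    }


# Hypothetical snapshots pulled from each system at the same point in time.
legacy_snapshot = {
    "u1": {"email": "a@example.com", "role": "admin"},
    "u2": {"email": "b@example.com", "role": "viewer"},
    "u3": {"email": "c@example.com", "role": "editor"},
}
shadow_snapshot = {
    "u1": {"role": "admin", "email": "a@example.com"},  # same data, different key order
    "u2": {"email": "b@example.com", "role": "editor"},  # role drifted during sync
}

report = reconcile(legacy_snapshot, shadow_snapshot)
print(report)
```

Run daily during the shadow window, a report like this turns "the mirror looks fine" into a list of specific records to investigate. It only covers data drift; undocumented workflows still have to be surfaced by watching how people actually use the legacy system.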

The single biggest failure point in large-scale migrations is Identity and Access Management (IAM). If users can't log in, the system's features don't matter. We ran a strict IAM hygiene audit 60 days before cutover. We didn't just migrate accounts; we mapped roles to actual usage logs. We found 4,000 “ghost accounts” (users who hadn't logged in for 90+ days) and deprovisioned them before migration, shrinking the attack surface and reducing licensing costs.
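The ghost-account rule above is simple enough to sketch. This is an illustration under assumptions, not the audit script we ran: the `find_ghost_accounts` helper, the `last_logins` mapping, and the sample users are hypothetical, and a real audit would read last-login timestamps from your identity provider's logs.

```python
from datetime import datetime, timedelta, timezone

# Accounts with no login in 90 or more days count as ghosts.
INACTIVITY_THRESHOLD = timedelta(days=90)


def find_ghost_accounts(last_logins: dict, now: datetime) -> list:
    """Return sorted IDs of accounts whose last login is 90+ days old, or missing."""
    cutoff = now - INACTIVITY_THRESHOLD
    return sorted(
        user_id
        for user_id, last_login in last_logins.items()
        if last_login is None or last_login <= cutoff
    )


# Hypothetical audit date and last-login data (None means the user never logged in).
audit_date = datetime(2024, 6, 1, tzinfo=timezone.utc)
last_logins = {
    "alice": datetime(2024, 5, 20, tzinfo=timezone.utc),
    "bob": datetime(2024, 1, 15, tzinfo=timezone.utc),
    "carol": None,
}

ghosts = find_ghost_accounts(last_logins, audit_date)
print(ghosts)
```

Treat the output as a review list rather than a delete list: service accounts and seasonal users can look inactive while still being needed.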

Technical teams often underestimate the “Ticket Spike.” A poorly communicated migration can trigger a 250% increase in support tickets within 24 hours. To prevent this, we established a “Hyper-Care” model:

Success isn't declared when the data is moved. It's declared when the business is operating at full velocity. For the 28,000-user migration, we implemented a strict 48-Hour Governance Lock.

We established a 24/7 command center staffed by decision-makers, not just engineers. If a critical blocker emerged, we didn't schedule a meeting; we made a decision. This reduced our Mean Time to Resolution (MTTR) from hours to minutes.

We also defined a clear Rollback Trigger. If critical system availability dropped below 99.9% for more than 30 minutes, or if data corruption affected more than 0.1% of records, we would execute an automated rollback to the legacy system. Having this safety net allowed the team to move with confidence rather than fear.
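A rollback trigger only builds confidence if it is unambiguous, so it helps to write it down as code. The sketch below encodes the two thresholds stated above; the `HealthSample` type, the `should_rollback` function, and the per-minute sampling are assumptions for illustration, not the monitoring stack used on the project.

```python
from dataclasses import dataclass

AVAILABILITY_FLOOR_PCT = 99.9   # availability below this counts as a breach
MAX_BREACH_MINUTES = 30         # a breach lasting longer than this triggers rollback
MAX_CORRUPTION_RATIO = 0.001    # more than 0.1% corrupted records triggers rollback


@dataclass
class HealthSample:
    minute: int               # minutes since cutover (hypothetical sampling scheme)
    availability_pct: float   # measured availability at that minute


def should_rollback(samples, corrupted_records: int, total_records: int) -> bool:
    """Return True if either rollback condition is met."""
    if total_records > 0 and corrupted_records / total_records > MAX_CORRUPTION_RATIO:
        return True

    breach_start = None
    for sample in sorted(samples, key=lambda s: s.minute):
        if sample.availability_pct < AVAILABILITY_FLOOR_PCT:
            if breach_start is None:
                breach_start = sample.minute
            if sample.minute - breach_start > MAX_BREACH_MINUTES:
                return True
        else:
            breach_start = None  # availability recovered, so the clock resets
    return False


# Hypothetical scenarios.
long_outage = [HealthSample(m, 99.5) for m in range(41)]
short_dip = [HealthSample(m, 99.5 if m < 20 else 99.95) for m in range(41)]

print(should_rollback(long_outage, 0, 28_000))   # sustained breach
print(should_rollback(short_dip, 0, 28_000))     # dip that recovered in time
print(should_rollback(short_dip, 30, 28_000))    # corruption above 0.1%
```

Resetting the clock when availability recovers is a design choice: it means only a continuous breach longer than 30 minutes fires the trigger. If repeated short dips should also count, track cumulative breach minutes instead.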

The result of this engineered approach? On Monday morning, 28,000 users logged in. There was no crash. No flood of angry emails to the CEO. Ticket volume remained within 15% of the baseline. We achieved the holy grail of IT operations: Zero Drama.

If your digital transformation is currently stuck in committee or facing massive delays, stop adding more project managers. Start engineering your governance. You don't need more time; you need a playbook that respects the complexity of your ecosystem.

Downtime is expensive, but lost trust is potential bankruptcy. Plan accordingly.