For most Operating Partners, the engineering department is the single largest line item on the P&L and the hardest to assess. You can audit a sales team by looking at CAC and quota attainment. You can audit finance by looking at DSO and cash flow. But engineering often remains a "black box" of high salaries and missed deadlines.

The financial stakes are high. According to Benchmarkit's 2025 SaaS Report, the median R&D spend for private B2B SaaS companies has stabilized at 34% of revenue, significantly higher than the 23% median for their public counterparts. In a high-interest-rate environment, carrying that much spend without visibility into its efficiency is a valuation killer.

The opacity has worsened with the rise of generative AI. Boards are hearing that developers are "50% faster" thanks to Copilot and ChatGPT. Yet features aren't shipping 50% faster. Why?

We are witnessing a decoupling of individual productivity and system stability. Data from the 2024 DORA State of DevOps Report reveals a startling trend: AI adoption is rising, yet the report estimates that every 25% increase in AI adoption is associated with a 1.5% decrease in delivery throughput and a 7.2% decrease in delivery stability. Developers are writing code faster, but that code ships in larger batches, breaks more often, and clogs the QA pipeline. If you aren't auditing for this, you are paying for speed and getting chaos.

You do not need to read code to audit a technical team. You need to look at the exhaust of the engineering machine. We use a three-pillar diagnostic that bypasses the "lines of code" vanity metrics and focuses on economic outcomes.

First, benchmark R&D intensity (R&D spend as a percentage of revenue) in the context of the Rule of 40, which holds that revenue growth rate plus profit margin should reach at least 40%. If a portfolio company is spending >35% of revenue on R&D but growing at <20%, you have a capital allocation failure. High R&D spend is acceptable only if it correlates with high growth or high retention (NRR >110%).

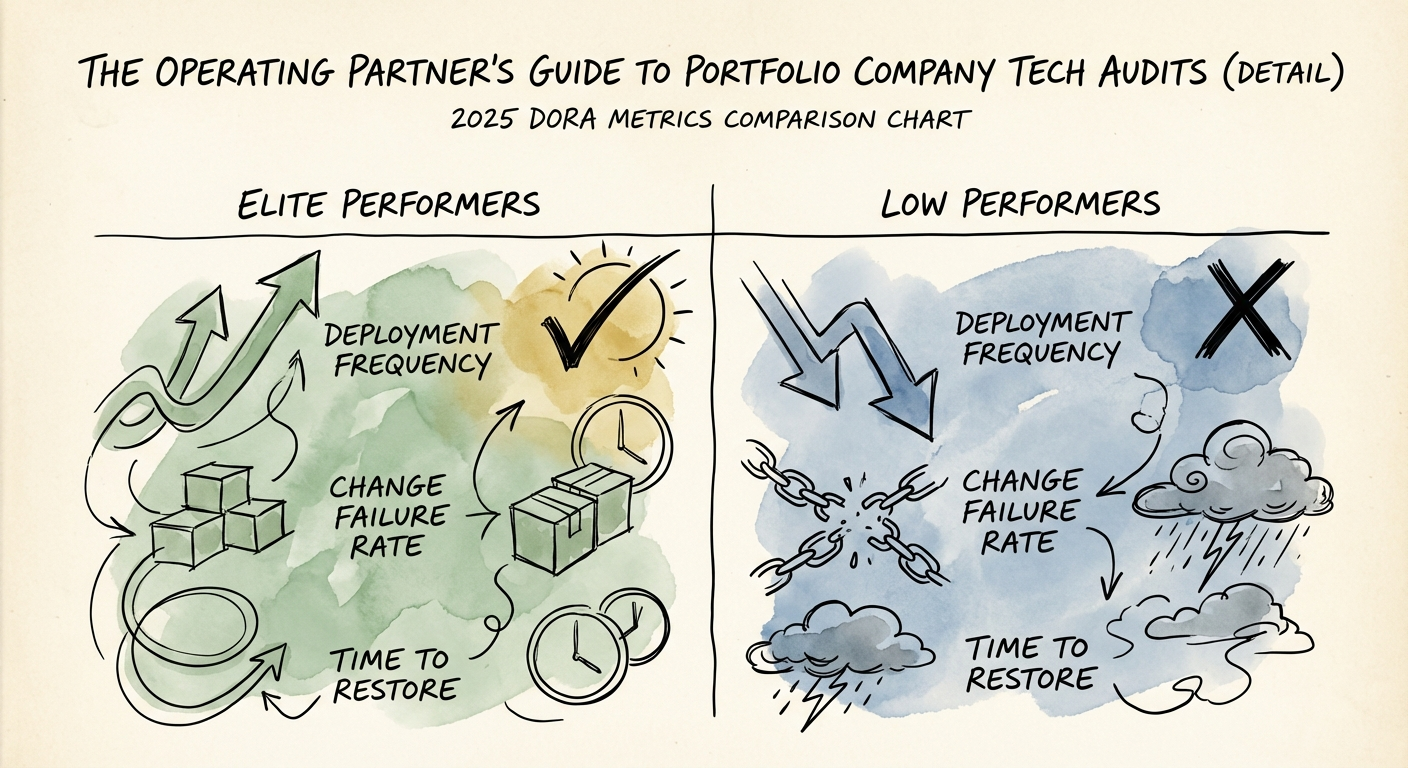
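The R&D intensity screen described above reduces to a few ratios. The sketch below is one way to encode it, assuming trailing-twelve-month figures and a profit margin defined by your fund's convention (EBITDA or free cash flow). The `PortfolioCompany` schema and all function names are hypothetical, and the flag reads NRR above 110% as the retention exception the text allows.

```python
from dataclasses import dataclass


@dataclass
class PortfolioCompany:
    """Trailing-twelve-month figures for one company (hypothetical schema)."""
    name: str
    revenue: float
    rd_spend: float
    revenue_growth_pct: float  # year-over-year growth, e.g. 12.0 means 12%
    profit_margin_pct: float   # assumption: EBITDA or FCF margin, per your fund's convention
    nrr_pct: float             # net revenue retention, e.g. 102.0 means 102%


def rd_intensity_pct(co: PortfolioCompany) -> float:
    """R&D spend as a percentage of revenue."""
    return 100.0 * co.rd_spend / co.revenue


def rule_of_40_score(co: PortfolioCompany) -> float:
    """Growth rate plus profit margin, in percentage points (40+ is the usual bar)."""
    return co.revenue_growth_pct + co.profit_margin_pct


def capital_allocation_flag(co: PortfolioCompany) -> bool:
    """True when R&D exceeds 35% of revenue, growth is under 20%, and NRR does
    not exceed 110%: heavy R&D with neither growth nor retention to show for it."""
    heavy_rd = rd_intensity_pct(co) > 35.0
    slow_growth = co.revenue_growth_pct < 20.0
    strong_retention = co.nrr_pct > 110.0
    return heavy_rd and slow_growth and not strong_retention


# Hypothetical company: $50M revenue, $19M R&D, 12% growth, 5% margin, 102% NRR.
acme = PortfolioCompany("Acme", 50e6, 19e6, 12.0, 5.0, 102.0)
print(f"R&D intensity: {rd_intensity_pct(acme):.1f}%")  # R&D intensity: 38.0%
print(f"Rule of 40 score: {rule_of_40_score(acme):.1f}")  # Rule of 40 score: 17.0
print(f"Capital allocation flag: {capital_allocation_flag(acme)}")  # Capital allocation flag: True
```

Raising the hypothetical company's NRR to 115% clears the flag, which mirrors the text's point that strong retention can justify heavy R&D spend.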

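Forget "story points" or "hours worked." The only valid measures of engineering performance are the four DORA metrics, which have become the industry standard for high-performing technology organizations:

- Deployment frequency: how often the team ships code to production.
- Lead time for changes: how long a committed change takes to reach production.
- Change failure rate: the percentage of deployments that cause a failure in production requiring remediation.
- Failed deployment recovery time (formerly time to restore service): how long it takes to recover when a deployment fails.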

If your CTO cannot produce these four numbers on a dashboard today, they are flying blind.
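To make the four metrics concrete, here is a minimal sketch that computes simplified versions of them from a list of production deployments. The `Deployment` record is a hypothetical schema, not the export format of Sleuth, LinearB, or any other tool. Recovery time is measured from the deploy timestamp rather than from incident detection, and medians stand in for whatever aggregation your dashboard actually uses.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import List, Optional


@dataclass
class Deployment:
    """One production deployment (hypothetical schema for a CI/CD or Jira export)."""
    deployed_at: datetime
    commit_times: List[datetime]            # commit time of each change in this deploy
    failed: bool                            # did it cause a production failure needing remediation?
    restored_at: Optional[datetime] = None  # when service recovered, if it failed


def hours(delta: timedelta) -> float:
    return delta.total_seconds() / 3600


def dora_metrics(deploys: List[Deployment], period_days: int) -> dict:
    """Simplified versions of the four DORA metrics for one reporting period."""
    if not deploys:
        raise ValueError("no deployments in the period")
    lead_times = [hours(d.deployed_at - c) for d in deploys for c in d.commit_times]
    failures = [d for d in deploys if d.failed]
    recoveries = [hours(d.restored_at - d.deployed_at)
                  for d in failures if d.restored_at is not None]
    return {
        "deploys_per_day": len(deploys) / period_days,
        # NaN rather than 0 when there is nothing to measure, so gaps stay visible.
        "median_lead_time_hours": median(lead_times) if lead_times else float("nan"),
        "change_failure_rate_pct": 100.0 * len(failures) / len(deploys),
        "median_recovery_hours": median(recoveries) if recoveries else float("nan"),
    }


# Hypothetical week: four deploys, one of which failed and took 3 hours to recover.
t0 = datetime(2025, 1, 6, 9, 0)
deploys = [
    Deployment(t0, [t0 - timedelta(hours=20)], failed=False),
    Deployment(t0 + timedelta(days=2), [t0 + timedelta(days=1)], failed=True,
               restored_at=t0 + timedelta(days=2, hours=3)),
    Deployment(t0 + timedelta(days=4), [t0 + timedelta(days=3, hours=12)], failed=False),
    Deployment(t0 + timedelta(days=6), [t0 + timedelta(days=5, hours=18)], failed=False),
]
print(dora_metrics(deploys, period_days=7))
```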

A hidden killer of deal value is technical debt. We assess it via the Rework Rate: the percentage of developer time spent fixing old code rather than building new features. In healthy firms, rework is <20%. In distressed assets, we often see it spike above 40%. That is effectively a 40% tax on every payroll dollar you deploy into engineering.
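The rework arithmetic fits on a napkin, but encoding it keeps the thresholds explicit. This sketch assumes you can split logged developer hours into rework and new-feature work (for example, by ticket type) and that time share approximates payroll share. The "elevated" band between 20% and 40% is a hypothetical label, since the text names only the healthy and distressed ranges.

```python
def rework_rate_pct(rework_hours: float, total_hours: float) -> float:
    """Percentage of developer time spent fixing existing code rather than building new features."""
    if total_hours <= 0:
        raise ValueError("total_hours must be positive")
    return 100.0 * rework_hours / total_hours


def rework_tax(engineering_payroll: float, rework_pct: float) -> float:
    """Payroll dollars consumed by rework, treating time share as cost share."""
    return engineering_payroll * rework_pct / 100.0


def rework_band(rework_pct: float) -> str:
    """Bands from the text (<20% healthy, >40% distressed); the middle label is hypothetical."""
    if rework_pct < 20.0:
        return "healthy"
    if rework_pct > 40.0:
        return "distressed"
    return "elevated"


# Hypothetical team: 4,200 of 10,000 logged hours went to rework; $6M annual payroll.
rate = rework_rate_pct(4_200, 10_000)
print(f"{rate:.1f}% rework -> {rework_band(rate)}")  # 42.0% rework -> distressed
print(f"${rework_tax(6_000_000, rate):,.0f} of payroll spent on rework")  # $2,520,000 of payroll spent on rework
```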

When you take over a new asset or initiate a turnaround, execute this non-technical audit immediately. Do not wait for the roadmap to slip.

Mandate the implementation of a DORA dashboard. Tools like Sleuth, LinearB, or simple Jira exports can generate this data. Stop accepting qualitative status updates ("We're making good progress") and demand quantitative flow metrics. If the Change Failure Rate is above 15%, halt all new feature development and prioritize a reliability sprint. You cannot build a skyscraper on a cracking foundation.
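The 15% rule is easy to automate once deployments are tagged with whether they caused an incident. Below is a minimal sketch that reads a CSV export and returns the recommended sprint focus. The `deploy_id` and `caused_incident` columns are assumptions; map them to the fields your own Jira or deployment-tool export actually contains.

```python
import csv
import io

# Hypothetical export: one row per production deployment in the period. The
# column names are assumptions; map them to your own Jira or deploy-tool fields.
SAMPLE_EXPORT = """deploy_id,caused_incident
D-101,no
D-102,yes
D-103,no
D-104,no
D-105,yes
D-106,no
D-107,no
D-108,no
"""


def change_failure_rate_from_csv(text: str) -> float:
    """Percentage of deployments flagged as having caused a production incident."""
    rows = list(csv.DictReader(io.StringIO(text)))
    if not rows:
        raise ValueError("export contains no deployments")
    failures = sum(1 for row in rows if row["caused_incident"].strip().lower() == "yes")
    return 100.0 * failures / len(rows)


def next_sprint_focus(cfr_pct: float, threshold_pct: float = 15.0) -> str:
    """Apply the 15% change-failure-rate rule: above it, halt feature work."""
    return "reliability sprint" if cfr_pct > threshold_pct else "feature work"


cfr = change_failure_rate_from_csv(SAMPLE_EXPORT)
print(f"CFR {cfr:.1f}% -> {next_sprint_focus(cfr)}")  # CFR 25.0% -> reliability sprint
```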

Audit the usage of AI tools. Are they being used to generate boilerplate code that bloats the codebase (bad), or to write unit tests and documentation (good)? Google Cloud's DORA research warns that AI adoption without improved testing practices is associated with instability. Require your engineering leaders to demonstrate how their testing infrastructure has evolved to handle the increased velocity of AI-generated code.
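One rough way to start the AI usage audit is to look at where AI-assisted changes land. The sketch below assumes the team labels AI-assisted pull requests and that you can list each changed file with its added line count. It buckets files into tests, docs, or production code by path, which is a hypothetical heuristic rather than an established standard.

```python
from pathlib import PurePosixPath
from typing import Dict, List, Tuple


def classify(path: str) -> str:
    """Rough path-based bucket for a changed file (heuristic, not a standard)."""
    p = PurePosixPath(path)
    parts = {part.lower() for part in p.parts[:-1]}  # directory names only
    name = p.name.lower()
    if parts & {"test", "tests"} or name.startswith("test_") or "_test." in name:
        return "tests"
    if "docs" in parts or p.suffix.lower() in {".md", ".rst"}:
        return "docs"
    return "production"


def ai_change_mix(changes: List[Tuple[str, int]]) -> Dict[str, float]:
    """Share of added lines per bucket across AI-assisted pull requests."""
    totals = {"tests": 0, "docs": 0, "production": 0}
    for path, lines_added in changes:
        totals[classify(path)] += lines_added
    grand_total = sum(totals.values())
    if grand_total == 0:
        raise ValueError("no added lines to classify")
    return {bucket: 100.0 * n / grand_total for bucket, n in totals.items()}


# Hypothetical (path, lines added) pairs from PRs the team labeled as AI-assisted.
changes = [
    ("src/billing/invoice.py", 420),
    ("tests/test_invoice.py", 60),
    ("docs/billing.md", 20),
    ("src/api/handlers.py", 500),
]
print(ai_change_mix(changes))  # {'tests': 6.0, 'docs': 2.0, 'production': 92.0}
```

A mix dominated by production code, with test files barely growing, is the boilerplate-heavy pattern the audit is meant to catch.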

Your job is not to manage the engineers; it is to manage the investment in engineering. By focusing on R&D-to-Revenue ratios, DORA stability metrics, and Rework Rates, you turn the black box into a dashboard. The goal is simple: ensure that every dollar of R&D spend translates into customer value, not technical debt.