You have likely lived through this scenario: You hire a Senior Engineer from a FAANG company. They aced the whiteboard interview, reversed a binary tree in O(n) time, and spoke eloquently about microservices architecture. On paper, they are a perfect fit.

Ninety days later, they haven’t shipped a single meaningful feature.

They spend their days arguing about code style in Pull Requests, refactoring working legacy code because it "wasn’t elegant," or complaining that your CI/CD pipeline isn’t Google-grade. Meanwhile, your roadmap is stalled.

You didn’t hire a bad engineer. You hired a False Positive.

The traditional technical interview process, heavy on algorithmic puzzles and whiteboard interrogation, was designed by massive corporations to filter thousands of applicants, not to identify the scrappy, product-minded engineers needed in a Series B or C company. According to data from Leadership IQ, 46% of new hires fail within 18 months. Crucially, only 11% of those failures stem from a lack of technical skill. The rest come down to poor coachability, temperament, and an inability to deliver results within the constraints of a scaling business.

Furthermore, high-pressure whiteboard sessions measure the wrong thing. A study by NC State University and Microsoft found that the whiteboard interview largely measures performance anxiety, not coding competence. Engineers who were watched while they worked performed roughly half as well as engineers who solved the same problems in private. You aren’t testing how well they code; you’re testing how well they handle public speaking while solving riddles.

For a scaling company, a bad technical hire is not just an annoyance; it is a P&L disaster. As we explored in The Real Cost of Bad Hires, the financial impact often exceeds 30% of the first-year salary—but the opportunity cost of missed product milestones is incalculable.

To predict how a candidate will perform in their first 90 days, you must stop asking them to solve puzzles and start asking them to do the job. We call this the 90-Day Simulation.

This is not a take-home test that consumes their weekend (which biases your pool against senior talent with families). It is a collaborative, 60-to-90-minute "Work Sample" session designed to mimic a real Tuesday morning at your company.

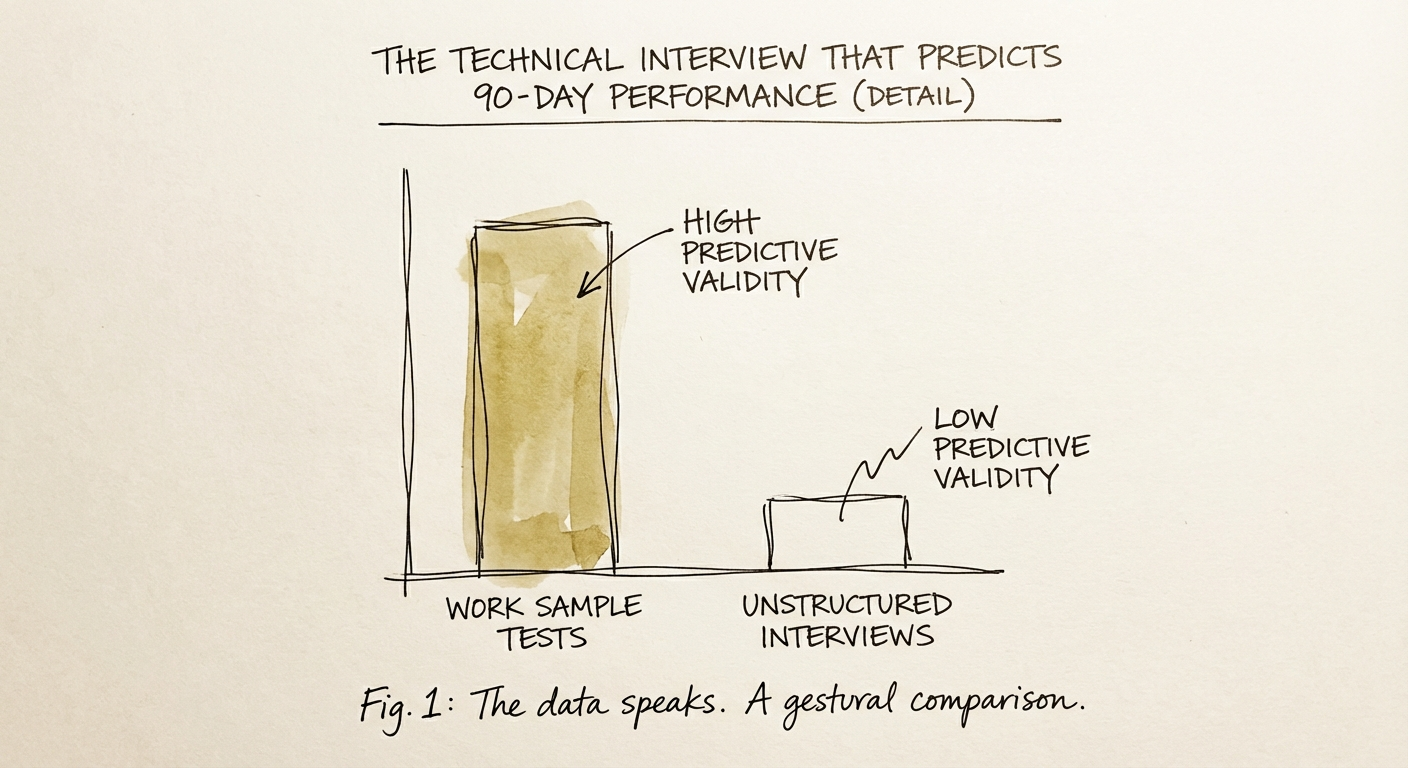

Research from Schmidt & Hunter has long established that work sample tests have significantly higher predictive validity (approx. 0.54) than unstructured interviews (0.38). Here is how to construct one that works:

While the candidate codes, your hiring manager sits with them, acting as a peer, not a proctor. This reveals the invisible traits that LeetCode misses:

In a Series B/C environment, you need engineers who can navigate messy, existing codebases without becoming paralyzed. The Simulation filters out the "Greenfield Architects" who only thrive when building from scratch.

The output of the 90-Day Simulation isn’t just "did the code pass?" It is a structured scorecard that predicts future friction.
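As a rough illustration of what such a scorecard could look like in practice, here is a minimal Python sketch. The criterion names, the 1-to-4 scale, and the `friction_flags` threshold are all assumptions made for this example, not a prescribed rubric; swap in whatever your team actually observes during the session.

```python
from dataclasses import dataclass, field

# Hypothetical criteria for illustration only; adapt to your own team.
CRITERIA = (
    "navigates_existing_code",
    "asks_for_context",
    "finds_logical_bug",
    "ships_missing_feature",
    "responds_to_feedback",
)


@dataclass
class Scorecard:
    candidate: str
    scores: dict = field(default_factory=dict)  # criterion -> rating from 1 to 4
    notes: dict = field(default_factory=dict)   # criterion -> observed evidence

    def rate(self, criterion: str, score: int, note: str = "") -> None:
        """Record a rating for one criterion, with optional evidence."""
        if criterion not in CRITERIA:
            raise ValueError(f"unknown criterion: {criterion}")
        if not 1 <= score <= 4:
            raise ValueError("score must be between 1 and 4")
        self.scores[criterion] = score
        if note:
            self.notes[criterion] = note

    def is_complete(self) -> bool:
        """True once every criterion has been rated."""
        return all(c in self.scores for c in CRITERIA)

    def friction_flags(self, threshold: int = 2) -> list:
        """Rated criteria at or below the threshold, in rubric order."""
        return [c for c in CRITERIA
                if c in self.scores and self.scores[c] <= threshold]
```

An interviewer might call `card.rate("asks_for_context", 1, "stayed stuck for 15 minutes without asking")` during the debrief, then review `card.friction_flags()` with the hiring panel. Writing down the evidence next to each score keeps the discussion on observed behavior rather than gut feel.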

You do not need HR approval to change your technical round. Instruct your Engineering Lead to scrap the "reverse a string" question for the next candidate.

Step 1: Fork a small, non-proprietary service that mimics your architecture.

Step 2: Introduce a logical bug (not a syntax error) and a missing feature.

Step 3: Run the simulation. Tell the candidate: "We are pair programming. I am your teammate. If you are stuck, ask me. I have context you don't."
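To make Step 2 concrete, here is one hypothetical example of a seeded logical bug, sketched in Python. The `paginate` function is invented for illustration and assumes a service that exposes 1-indexed pages. The point is that the code runs without any error, so the candidate has to read it, reproduce the wrong behavior, and reason about intent.

```python
def paginate(items, page, page_size):
    """Return the items for a 1-indexed page number."""
    # Seeded logical bug: `page` is treated as 0-indexed, so page 1
    # silently skips the first `page_size` items. Nothing crashes;
    # only the result is wrong.
    start = page * page_size
    return items[start:start + page_size]


def paginate_fixed(items, page, page_size):
    """The fix a candidate would be expected to reach."""
    start = (page - 1) * page_size
    return items[start:start + page_size]
```

With ten items and a page size of three, `paginate(list(range(10)), 1, 3)` returns `[3, 4, 5]`, while the fixed version returns `[0, 1, 2]`. A bug of this kind rewards candidates who ask what "page 1" means to the product, which is exactly the context your interviewer holds.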

By shifting from interrogation to collaboration, you stop hiring for memorization and start hiring for execution. As you scale, your ability to assess actual engineering output—not just theoretical knowledge—will determine whether your product roadmap hits its deadlines or dies in technical debt reviews. For a broader look at assessing your team's overall health, consider running The Non-Technical Audit across your organization.

Hiring is the most expensive activity in your company. Stop gambling on puzzles.