If I asked to see your Incident Response (IR) plan right now, you would likely pull up a pristine PDF. It probably has a version control table, a neat escalation tree, and a sign-off from your CISO dated six months ago. It passed your last SOC 2 audit with flying colors.

And it is completely useless.

I say this not to be provocative, but because I have sat in the war room at 3:00 AM with CIOs who are watching their careers evaporate in real time. They had the plan. But when the ransomware locked Active Directory, the plan was stored on the network drive that had just been encrypted.

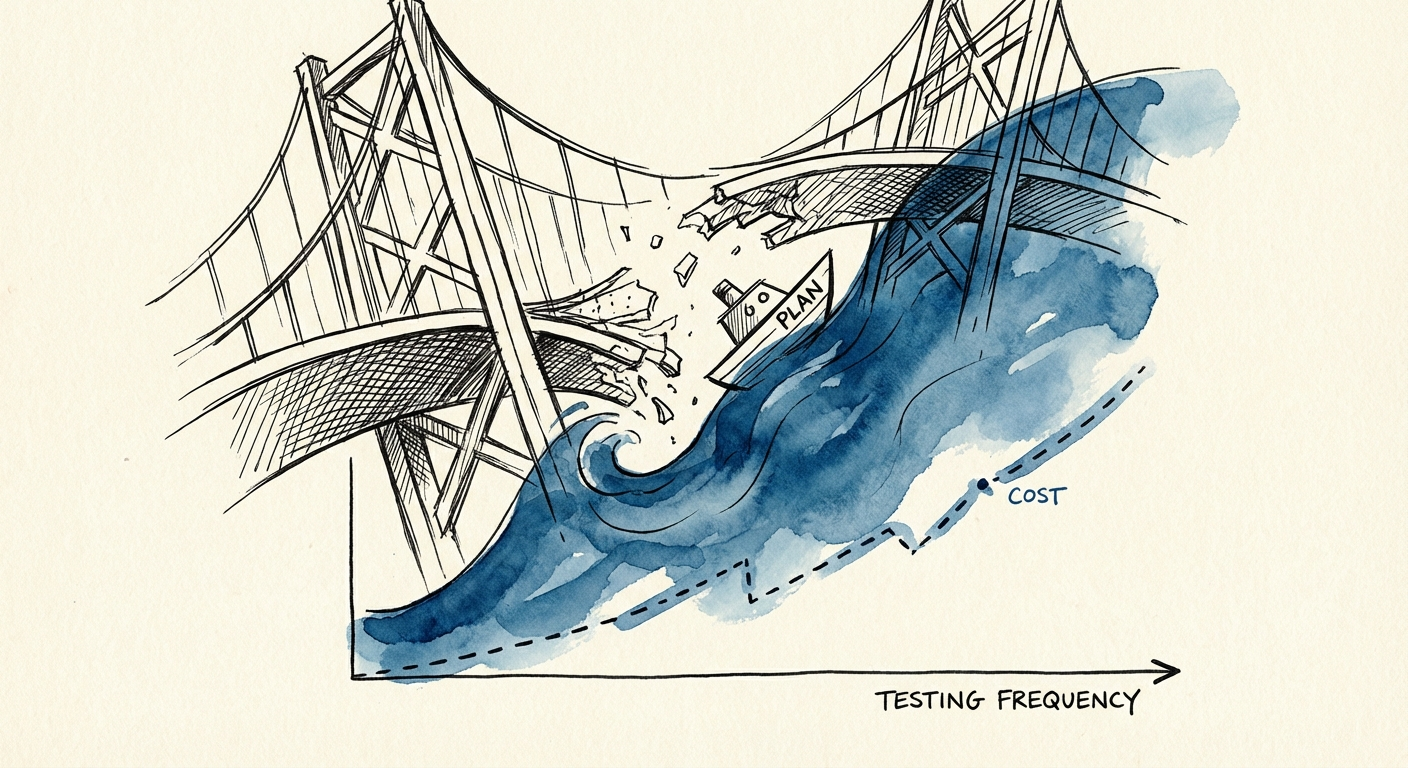

For most enterprise leaders, Incident Response is a compliance box to check. You need it for insurance, you need it for the board, and you need it for auditors. But compliance does not equal capability. IBM’s 2024 Cost of a Data Breach Report reveals a terrifying reality: the average cost of a data breach has hit $4.88 million. However, there is a $2.03 million delta between organizations that just have a plan and those that actually test it.

The difference isn't the document. It's the muscle memory.

Most IR plans are designed for a polite, theoretical exercise where the phone tree works, the VPN is stable, and the threat actor waits for you to wake up. Real attacks are chaotic, dirty, and specifically designed to sever the very tools you rely on to respond. If your plan assumes you can use Slack to coordinate the response to an attack that just compromised your Okta instance, you don't have a plan; you have a fantasy.

When we conduct cybersecurity risk assessments for distressed portfolios, we rarely find a lack of tools. We find a lack of operational reality. Here are the three specific failure points where standard IR plans collapse.

Your plan likely says: "Notify the Core Response Team via email and Slack." But in a compromised environment, you must assume your primary communications channels are hostile territory. Threat actors often monitor internal comms to track your response actions.

The Data: According to Ponemon and IBM, the Mean Time to Contain (MTTC) for a breach extends to 304 days for organizations without functional IR teams and testing. That is nearly a year of exposure. Why? Because the first 48 hours are spent figuring out how to talk securely.

The Fix: You need an "Out-of-Band" (OOB) communication protocol: Signal groups on personal devices, or a dedicated M365 tenant, fully isolated from your production identity systems and network, used solely for crisis response. If you haven't bought the burner phones yet, you aren't ready.

Security best practices demand Least Privilege Access and strict MFA. But during a catastrophic outage or ransomware event, your MFA provider might be down, or your admin credentials might be locked out. I’ve seen teams lose four critical hours waiting for a vendor support ticket to reset a root password.

The Benchmark: Downtime costs enterprise firms between $300,000 and $5 million per hour depending on the sector. Every minute you spend fighting your own security controls is bleeding EBITDA.
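To make that benchmark concrete, here is a back-of-the-envelope sketch in Python. It combines the four-hour password reset wait described above with the hourly range quoted in the benchmark. The function name `downtime_cost_range` is illustrative, and the figures are the ones cited in this article, not measurements from your environment.

```python
# Illustrative only: the hourly figures are the benchmark range quoted above.
LOW_COST_PER_HOUR = 300_000      # USD per hour, low end of the range
HIGH_COST_PER_HOUR = 5_000_000   # USD per hour, high end of the range


def downtime_cost_range(hours_lost: float) -> tuple[float, float]:
    """Return the (low, high) estimated cost in USD for the given hours of downtime."""
    if hours_lost < 0:
        raise ValueError("hours_lost must be non-negative")
    return hours_lost * LOW_COST_PER_HOUR, hours_lost * HIGH_COST_PER_HOUR


# The four hours spent waiting on a vendor ticket to reset a root password.
low, high = downtime_cost_range(4)
print(f"Estimated cost of a 4-hour lockout: ${low:,.0f} to ${high:,.0f}")
```

Under those assumptions, a single four-hour lockout lands somewhere between $1.2 million and $20 million, before any breach costs are counted.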

Your plan lists who is on the call, but does it list who has the authority to kill revenue? If you need to sever the connection to your biggest customer to stop lateral movement, can the VP of Engineering make that call at 2 AM? Or do they need to wake up the CEO?

In the horror stories of due diligence, we see breaches that ballooned from $50k incidents to $50M catastrophes simply because the technical lead was afraid to shut down the production server without written permission.
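One way to remove that hesitation is to write the decision rights down before the incident, in a form that can be printed and checked in seconds. The sketch below is a minimal, hypothetical pre-authorization matrix in Python. The role names, action names, and the `can_act_now` helper are assumptions for illustration, not a standard; substitute your own organization's roles and actions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Authorization:
    """Who may take a disruptive action without waiting for further sign-off."""
    authorized_roles: frozenset[str]   # may act immediately
    notify_after: tuple[str, ...]      # informed after the fact, not asked before


# Hypothetical entries: every role and action name here is an example.
AUTHORITY_MATRIX: dict[str, Authorization] = {
    "shut_down_production_server": Authorization(
        authorized_roles=frozenset({"incident_commander", "technical_lead"}),
        notify_after=("vp_engineering", "ciso"),
    ),
    "sever_customer_connection": Authorization(
        authorized_roles=frozenset({"incident_commander", "vp_engineering"}),
        notify_after=("ceo", "ciso"),
    ),
}


def can_act_now(role: str, action: str) -> bool:
    """True if the role is pre-authorized to take the action without escalation."""
    auth = AUTHORITY_MATRIX.get(action)
    return auth is not None and role in auth.authorized_roles


# At 2 AM, the VP of Engineering does not need to wake up the CEO first.
print(can_act_now("vp_engineering", "sever_customer_connection"))
print(can_act_now("technical_lead", "sever_customer_connection"))
```

The point of the structure is the `notify_after` field: the CEO still hears about it, but after the connection is cut rather than before.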

You cannot buy your way out of this with more tools. You must engineer your way out with process. Here is the operational framework to turn your IR plan into a weapon.

Annual tabletops are insufficient. You need quarterly, scenario-based drills that hurt. Don't just talk through it; actually simulate the pain.

Q1: Ransomware encrypts the ERP.

Q2: Insider threat leaks customer database.

Q3: Vendor supply chain attack (e.g., your MSP is compromised).

Q4: Executive kidnapping/extortion.

Invite your legal counsel and PR team. The technical fix is often the easy part; the regulatory disclosure timeline is where the liability lives.
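The quarterly cadence above is simple enough to track in a few lines of code, so a missed drill shows up as a failed check rather than a forgotten calendar invite. This is a minimal sketch in Python: the scenario text comes from the list above, while the names `DRILL_SCENARIOS`, `quarter_of`, `drill_for`, and `is_overdue` are hypothetical, and the rule "one drill per calendar quarter" is my reading of "quarterly".

```python
from datetime import date

# Scenarios taken from the quarterly list above.
DRILL_SCENARIOS = {
    1: "Ransomware encrypts the ERP",
    2: "Insider threat leaks customer database",
    3: "Vendor supply chain attack (e.g., your MSP is compromised)",
    4: "Executive kidnapping/extortion",
}

# Technical responders plus the non-technical participants the drill needs.
REQUIRED_ATTENDEES = ("core response team", "legal counsel", "PR team")


def quarter_of(day: date) -> int:
    """Calendar quarter (1-4) that the given date falls in."""
    return (day.month - 1) // 3 + 1


def drill_for(day: date) -> str:
    """Scenario scheduled for the quarter containing the given date."""
    return DRILL_SCENARIOS[quarter_of(day)]


def is_overdue(last_drill: date, today: date) -> bool:
    """True if no drill has been run yet in the current calendar quarter."""
    return (last_drill.year, quarter_of(last_drill)) < (today.year, quarter_of(today))


print(drill_for(date(2025, 5, 20)))
print(is_overdue(last_drill=date(2024, 12, 15), today=date(2025, 1, 10)))
```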

Do not rely on cloud documentation. Your Core Crisis Team needs a physical or offline-encrypted digital "War Chest" containing:

Burnout is a security risk. If your "Level 1" responder is an exhausted engineer who has been awake for 24 hours, they will miss the alert. We have written extensively on engineering on-call rotations that prevent burnout. A tired team makes mistakes; a tired team during a breach causes disasters.
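A rotation only prevents fatigue if someone enforces the hand-off. As a rough sketch, the Python check below flags a responder who has been on shift past a limit. The 12-hour limit and the name `needs_relief` are assumptions for illustration; set the threshold to whatever your own rotation policy specifies.

```python
from datetime import datetime, timedelta

# Assumed policy limit for illustration; not a figure from this article.
MAX_CONTINUOUS_SHIFT = timedelta(hours=12)


def needs_relief(shift_start: datetime, now: datetime,
                 limit: timedelta = MAX_CONTINUOUS_SHIFT) -> bool:
    """True if the responder has been on shift for at least the allowed limit."""
    return now - shift_start >= limit


# The exhausted engineer from the example above: 24 hours without a hand-off.
started = datetime(2025, 3, 1, 2, 0)
print(needs_relief(started, started + timedelta(hours=24)))
```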

Transition Tom, your job is not to configure the firewall. Your job is to ensure the governance exists to survive the fire. The data is clear: Organizations that regularly test their IR plans save an average of $2 million per breach. That is the difference between a bad quarter and a company-ending event.

Stop polishing the document. Start breaking the system. It is better to fail in a conference room on a Tuesday afternoon than in the headlines on a Sunday morning.